Introduction

If you've worked with LLMs like Claude or GPT-4o, you know getting good outputs requires iteration. You go back and forth with the AI, refining your request until you get what you need. This works great for exploration, but what if we could add structured reasoning to every step?

I built a proof of concept tool that injects DeepSeek R1’s reasoning for any given message into Claude’s output context as a prefill, effectively making Claude think that R1’s thoughts are its own before it writes anything. If you have uv installed, you can try it out with a single command:

uvx diffdev --frankenclaudediffdev is a basic command line tool I built to experiment with LLM workflows. To see why this is powerful, we need to first understand the difference between these two types of language models.

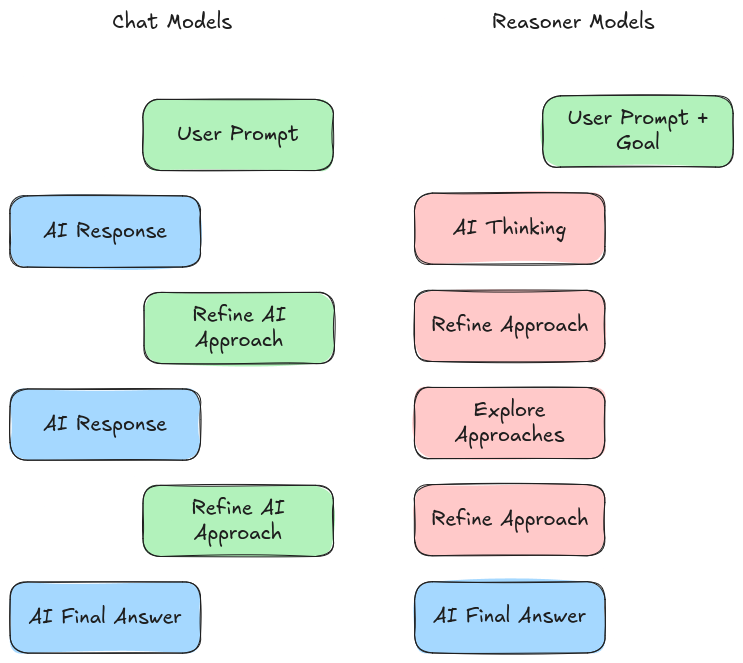

Chat vs. Reasoning Models

Late last year, OpenAI released o1, their reasoning model. While it might look like a chat model, it serves a different purpose. The key difference: chat models help when you're exploring undefined problems, while reasoning models excel when you have a clear goal and need structured analysis to reach it.

There’s nuance to it for sure, but that’s the gist of it. Latent Space did a fantastic deep dive on how to use reasoning models.

Key Models

DeepSeek, a side project from a Chinese quantitative ML fund, is outperforming western foundation labs significantly.

They’ve recently released two models:

DeepSeek V3: A chat model

DeepSeek R1: A reasoning model

In both cases they’ve shocked the AI community. This was Andrej Karpathy’s reaction to the V3 technical report:

R1 has shocked people in other ways. Namely, this is the first time that all of these things have simultaneously been true:

A non-American LLM is either tied or in the lead for the best model

The LLM is open source, MIT licensed (including using it for training data and autonomous weapons systems)

Every method to reproduce it was divulged in their technical report

Some are saying it's less censored than western models. I haven't verified this, but it wouldn’t surprise me given the obsession many American labs have with

censoringsafety training their models.

Separately, Claude stands out among AI enthusiasts for its natural conversation style while maintaining state-of-the-art (or near-SOTA) performance. This combination of intelligence and personability makes it perfect for iterative problem-solving.

This is distinctly different from the behavior that I’ve noticed out of reasoning models:

Ignores or even removes details that are not immediately relevant to the problem at hand (like a function in code that is necessary in your codebase but irrelevant to the coding task it’s trying to right now)

Multiple chat iterations do not inherently converge towards a solved problem if you don’t know what problem you are trying to solve is. I find chat models to be better at working with the human as a copilot rather than an “I’ll take it from here!” approach.

FrankenClaude Workflow

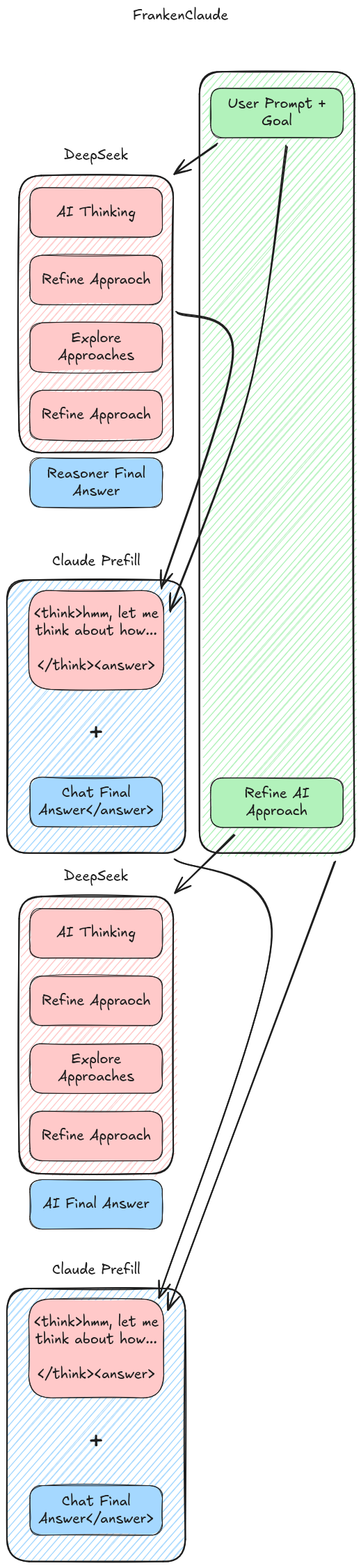

I created FrankenClaude to combine the best of both worlds: DeepSeek R1's reasoning with Claude's conversational abilities.

The idea is to treat each response from Claude as a new “focused problem” for R1 to chew on for a while, then feed that thought process directly into Claude. The thought here of course being that since R1 was trained to recognized better lines of reasoning, it can converge on a more logical approach to solve the problem as a precursor to Claude implementing a fix. Here's how it works:

User sends a message

DeepSeek R1 analyzes only the most recent message

R1's reasoning process (not its final answer) is captured

Claude receives the full conversation history plus R1's reasoning

Claude responds with both personal touch and structured analysis

This approach lets us maintain Claude's code consistency and attention to detail while adding R1's analytical capabilities.

Technical Considerations

The workflow uses a split context approach:

Claude gets the full conversation history (200K context window)

R1 only sees the latest message (64K context window)

This design choice was made for two reasons:

Cost efficiency: R1 costs $2/million tokens. Limiting its context reduces costs significantly.

Context window limitations: While we could give both models full context, we'd be limited to R1's 64K window.

Performance

Truth be told, it’s pretty tough to evaluate its performance in its current form. There are some major UX barriers to overcome for me to consider it useful. Between the reasoner model only having the context of a single message and the fact that there is no multi-line input is making it really tough to use it how I normally use LLMs these days. That being said, there was one instance where I was writing this article that surprised me.

In the past I’ve tried to use LLMs to accelerate my writing, but the writing always came out kind of average and not sounding very much like me at all. As a test prompt more than anything, I threw some of my ideas and ramblings for this article into FrankenClaude and told it to first write an outline, then write an article based off of that. For the first time ever, it managed to write an article that sounded like me.

Mind you, it was still closer to an outline than an article worthy of publishing (I would never stoop to the level of churning out AI slop). But the fact that what was there felt like something I would have written is something I have never experienced before and will likely be my method of outlining articles going forward.

Beyond that, a much more thorough way of testing FrankenClaude would be to stick it behind an API and point an agentic coding agent like Cursor or Cline at it. Using it to make in depth, detailed, repo wide changes to production code really is the only way to properly battle test new tools like this.

Final Thoughts

It's currently a proof of concept, but it demonstrates the potential for combining different model types to enhance AI interactions. The split context approach, while not ideal, offers a practical balance between cost, capability, and implementation complexity. There are other approaches being tried right now. [Some people](https://github.com/martinbowling/thoughtful-claude) are already experimenting with giving Claude an MCP server that lets it call out to an external reasoner when it needs to.

I suspect the final solution will look similar to that moving forward, but for now you can get a glimpse at the future by running:

uvx diffdev --frankenclaude