GPU Analysis with OpenAI's DeepResearch

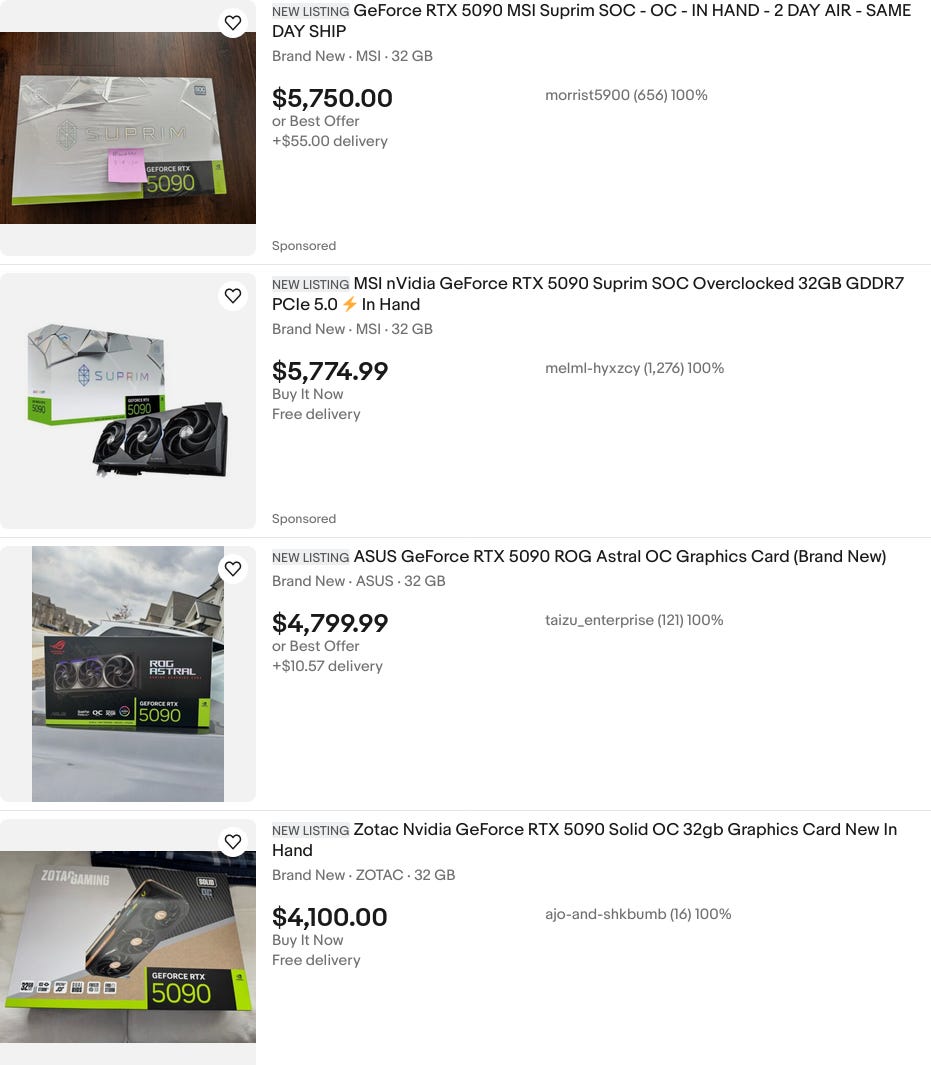

I am GPU poor. I have a 1080ti graphics card, and it doesn’t look like that will be changing anytime soon given the current prices of graphics cards.

As a result, I need to learn how to work with what I’ve got. Yeah, sure, I could rent a cloud GPU instance, but I don’t want to be SSHing into a remote box for weeks on end and transferring datasets back and forth if I don’t have to. I know there have been a ton of advancements in GPU tech over the past few years, but much of the information is scattered. If I want to know all of the ways to really take advantage of my hardware, it would be really helpful to have all of the information in one place (if only to paste into an LLM later down the road to help me out more accurately).

To learn about as many differences as I could, I went to OpenAI’s DeepResearch tool, which recently became available to Plus users, to see what it could come up with. This was the prompt I gave it:

I need a ton of information of the GTX 1080ti, particularly it's architecture in relation to something like an RTX 4090. I need to know it for the purposes of training and inferencing with AI models. There are some operators/methods that are supported on newer cards (floating point precision levels and tensor cores, for example) that are not on the 1080ti.

It responded with some clarifying questions:

To provide a thorough comparison of the GTX 1080 Ti and RTX 4090 in the context of AI model training and inference, could you clarify the specific details you need?

Are you looking for a general architectural comparison or a deep dive into CUDA core differences, tensor core support, and FP precision capabilities?

Do you need benchmark comparisons for AI workloads (such as PyTorch, TensorFlow, LLM inference, etc.)?

Are you interested in memory bandwidth, VRAM capacity, and their impact on AI processing?

Any particular AI model types (e.g., deep learning, generative models, reinforcement learning) you are focusing on?

Let me know so I can tailor the research accordingly!

To which I responded:

1. All of it

2. Yes benchmark comparisons would be nice, but I'm mostly focused on the entire array of actual hardware capabilities

3. Yes, but I need much more information than that

4. Transformers

I need the niches. The kind of information most people wouldn't care about or even think to look for. AI wasn't as popular when the 1080ti was released, but some people were playing with it even back then. I need to know the theoretical capabilities of both cards given every aspect of their hardware. Take this page on the 5090 for example:

(an example page that listed out a bunch of tech specs for the 5090)

I need as much if not more detail than this for the 1080ti and 4090

I chose the 4090 rather than the 5090 because I figured more experimentation with it would have been posted on the internet. Within 8 minutes, DeepResearch had combed through 41 different sources and even used tools like a Python interpreter to verify some of the numbers (I assume; DeepResearch’s thought traces aren’t public).

This report was really helpful in that it helped me discover a couple of problems with the training run for my self-driving Rocket League AI project:

I thought I was using mixed precision on my 1080ti even though I wasn’t

If I had been using mixed precision like I intended to, the training would have been much slower given the 1080ti’s poor performance with FP16 operations (about 1/64th the speed of FP32)

I think these super technical deep dives are where DeepResearch can really shine. At some point I will probably need to come back to this report, so I’m posting it here for you all to read as well. Everything from here on out is the research report that DeepResearch generated. Enjoy!

Hardware Architecture Breakdown

Microarchitecture (Pascal vs Ada Lovelace): The GTX 1080 Ti is built on NVIDIA’s Pascal architecture (GP102 GPU), whereas the RTX 4090 uses the newer Ada Lovelace architecture (AD102 GPU) (Pascal (microarchitecture) - Wikipedia). Pascal (2016) was designed primarily for FP32 shading and general compute, with no dedicated AI or ray-tracing cores. Ada Lovelace (2022) is a far more advanced design, inheriting improvements from Turing and Ampere (e.g. Tensor Cores, RT Cores) and built on a much smaller silicon process, allowing dramatically more transistors and higher clocks (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database). In practice, this means the 1080 Ti’s SMs (streaming multiprocessors) are simpler, while the 4090’s SMs are equipped with additional functional units specialized for AI and ray tracing tasks.

CUDA Cores and Specialized Units: The GTX 1080 Ti contains 3584 CUDA cores across 28 SMs (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database), with no separate tensor or ray-tracing cores. In contrast, the RTX 4090 has 16,384 CUDA cores on 128 SMs (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database) – over 4.5× the count – plus 512 4th-gen Tensor Cores and 128 3rd-gen RT Cores dedicated to AI and ray tracing (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database). The 1080 Ti relies solely on its CUDA cores for all computations, whereas the 4090’s Tensor Cores accelerate matrix-heavy operations common in AI workloads. (Each Ada SM includes 4 Tensor Cores (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database), enabling massively parallel mixed-precision matrix multiply-accumulate operations.) The lack of Tensor Cores on Pascal means the 1080 Ti cannot leverage tensor math units for deep learning; it also has no RT cores (ray intersection units), though those are mostly irrelevant for AI training. The 4090’s CUDA cores themselves are also more capable per core, with architectural enhancements and higher clocks (boost ~2520 MHz vs ~1582 MHz on the 1080 Ti) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database) (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database), further widening the raw throughput gap.

Precision Support (FP32, FP16, FP64): Both GPUs natively support 32-bit floats (FP32) as the primary compute format. The 1080 Ti delivers ~11.3 TFLOPS of FP32 peak throughput (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database), while the 4090 delivers an enormous ~82.6 TFLOPS in FP32 (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database) – roughly 7.3× higher. For FP16 half-precision, Pascal GPUs have only limited support: packed FP16x2 math (two FP16 operations per FP32 lane) runs at full speed only on the datacenter GP100 chip, while the consumer GP102 in the 1080 Ti carries only a token amount of FP16x2 hardware, so in practice the 1080 Ti sees little speedup with FP16 (The Best GPUs for Deep Learning in 2023 — An In-depth Analysis) (LLM Inference - Consumer GPU performance | Puget Systems). TechPowerUp specs even list the 1080 Ti’s FP16 rate as just 177 GFLOPS (1/64 of the FP32 rate) (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database), indicating no meaningful half-precision throughput advantage on that GPU. The RTX 4090, by contrast, treats FP16 as a first-class citizen. Its FP16 throughput via standard CUDA cores is 82.6 TFLOPS (1:1 with FP32) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database), but via Tensor Cores it is far higher: roughly 330 TFLOPS of dense FP16 matrix math when accumulating in FP16 (each of the 512 Tensor Cores completes a small FP16 matrix multiply-accumulate every clock), and about half that when accumulating in FP32, which GeForce cards run at half rate. This huge gap means the 4090 excels at mixed-precision training (FP16 inputs with FP32 accumulation), while the 1080 Ti sees only modest gains or even convergence issues if forced to 16-bit (The Best GPUs for Deep Learning in 2023 — An In-depth Analysis) (LLM Inference - Consumer GPU performance | Puget Systems).
For FP64 (double precision), both are limited because they are consumer-class GPUs: the 1080 Ti’s FP64 rate is 1/32 of FP32 (~0.35 TFLOPS) (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database), and the 4090’s is 1/64 of FP32 (~1.29 TFLOPS) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database). Neither is intended for heavy FP64 workloads (that’s for Tesla/Quadro variants), but the 4090’s Tensor Cores can instead accelerate TF32 (a 19-bit precision format introduced in Ampere) and even support FP8 on Ada (Ada Lovelace (microarchitecture) - Wikipedia) – data types not available at all on Pascal.
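
The peak numbers above fall out of simple arithmetic: each CUDA core can retire one fused multiply-add (2 FLOPs) per clock, so peak FP32 is cores × 2 × boost clock, and the reduced-rate formats are fixed fractions of that. Below is a minimal sketch using the core counts and boost clocks quoted above. The 256 FLOPs per Tensor Core per clock is an assumption back-derived from the ~330 TFLOPS dense FP16 figure, not a number stated in the sources.

```python
"""Back-of-the-envelope peak throughput for the GTX 1080 Ti and RTX 4090."""

FLOPS_PER_FMA = 2  # a fused multiply-add counts as one multiply plus one add


def peak_tflops(units: int, boost_mhz: float, flops_per_unit_per_clock: float) -> float:
    """Peak TFLOPS = execution units x FLOPs per unit per clock x clock rate."""
    return units * flops_per_unit_per_clock * boost_mhz * 1e6 / 1e12


# CUDA core counts and boost clocks as quoted from TechPowerUp in the text.
gtx_1080_ti_fp32 = peak_tflops(3584, 1582, FLOPS_PER_FMA)   # ~11.34
rtx_4090_fp32 = peak_tflops(16384, 2520, FLOPS_PER_FMA)     # ~82.58

# Consumer parts run reduced-rate formats at a fixed fraction of FP32.
gtx_1080_ti_fp16 = gtx_1080_ti_fp32 / 64   # ~0.177 TFLOPS (177 GFLOPS)
gtx_1080_ti_fp64 = gtx_1080_ti_fp32 / 32   # ~0.35 TFLOPS
rtx_4090_fp64 = rtx_4090_fp32 / 64         # ~1.29 TFLOPS

# Assumption: 256 dense FP16 FLOPs per Tensor Core per clock (FP16 accumulate),
# back-derived from the ~330 TFLOPS figure rather than quoted from a spec sheet.
rtx_4090_tensor_fp16 = peak_tflops(512, 2520, 256)          # ~330.3

if __name__ == "__main__":
    print(f"FP32: 1080 Ti {gtx_1080_ti_fp32:.2f} vs 4090 {rtx_4090_fp32:.2f} TFLOPS "
          f"({rtx_4090_fp32 / gtx_1080_ti_fp32:.1f}x)")
    print(f"FP16: 1080 Ti {gtx_1080_ti_fp16 * 1000:.0f} GFLOPS vs 4090 Tensor {rtx_4090_tensor_fp16:.1f} TFLOPS")
    print(f"FP64: 1080 Ti {gtx_1080_ti_fp64:.2f} vs 4090 {rtx_4090_fp64:.2f} TFLOPS")
```

The FP32 ratio lands at about 7.3×, matching the text. The much larger jump to the Tensor Core figure is what makes mixed precision so attractive on the 4090 and pointless on the 1080 Ti.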

Memory Configuration (VRAM and Bandwidth): The GTX 1080 Ti comes with 11 GB of GDDR5X VRAM on a 352-bit bus, providing ~484 GB/s bandwidth (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database). The RTX 4090 more than doubles capacity to 24 GB of newer GDDR6X on a 384-bit bus, with an effective bandwidth just over 1 TB/s (1008 GB/s) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database). This ~2× memory size and ~2× bandwidth advantage is critical for large AI models, particularly transformer networks that store many weights and activations. Larger VRAM lets the 4090 keep much bigger models or batch sizes in GPU memory without hitting the out-of-memory errors a 1080 Ti would encounter at 11 GB. For example, transformer models that barely fit in 24 GB would need model partitioning or very small batch sizes on an 11 GB card. Bandwidth-wise, transformers can be memory-intensive (e.g. attention mechanisms reading large key/value tensors), so the 4090’s higher memory throughput helps feed its compute units. Most of that bandwidth gain comes from GDDR6X’s much higher per-pin data rate compared with the 1080 Ti’s GDDR5X; the slightly wider bus contributes the rest. In short, the RTX 4090 can hold and stream much more data for AI training, reducing the need to swap to host memory mid-epoch (which would be prohibitively slow on the 1080 Ti if a model doesn’t fully fit).
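
Peak VRAM bandwidth is the per-pin data rate times the bus width, divided by 8 bits per byte. The sketch below assumes effective data rates of 11 Gbps (GDDR5X) and 21 Gbps (GDDR6X). Those rates are not quoted in the report, but they reproduce its 484 GB/s and 1008 GB/s figures. It also computes a bandwidth-only floor on reading a block of weights once, which roughly bounds memory-limited work such as generating one token. The 3 GB weight size is a hypothetical example.

```python
"""Peak VRAM bandwidth and a bandwidth-only lower bound on streaming time."""


def dram_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak GB/s = per-pin data rate (Gbit/s) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


def min_stream_time_ms(num_bytes: float, bandwidth_gbs: float) -> float:
    """Fastest possible time to read num_bytes once from VRAM (ignores caches and compute)."""
    return num_bytes / (bandwidth_gbs * 1e9) * 1e3


# Assumed effective data rates: 11 Gbps GDDR5X (1080 Ti) and 21 Gbps GDDR6X (4090).
gtx_1080_ti_bw = dram_bandwidth_gbs(11, 352)   # 484.0 GB/s
rtx_4090_bw = dram_bandwidth_gbs(21, 384)      # 1008.0 GB/s

# Hypothetical workload: 3 GB of FP16 weights (roughly 1.5B parameters x 2 bytes),
# read in full once, roughly what each decoding step of a small LLM does.
weights_bytes = 3e9

if __name__ == "__main__":
    for name, bw in (("GTX 1080 Ti", gtx_1080_ti_bw), ("RTX 4090", rtx_4090_bw)):
        print(f"{name}: {bw:.0f} GB/s, >= {min_stream_time_ms(weights_bytes, bw):.2f} ms per full pass")
```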

Cache Hierarchy (L1, L2 caches): NVIDIA greatly expanded on-chip cache in newer architectures. The Pascal GTX 1080 Ti has a 48 KB L1 cache per SM and a total of 2.75 MB L2 cache across the chip (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database). By contrast, the RTX 4090’s Ada architecture devotes a massive 72 MB L2 cache on the GPU (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database) – roughly 26× larger! Each of the 4090’s SMs also has a larger L1 cache (128 KB per SM) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database). This huge cache differential means the 4090 can keep much more of the working set on-chip, drastically reducing expensive DRAM accesses for many workloads. For AI tasks like matrix multiplications in transformers, data can be tiled to reuse values from L2/L1; Ada’s large L2 (distributed across memory partitions) allows far more reuse. Pascal’s small caches, on the other hand, mean the 1080 Ti often has to fetch from VRAM more frequently during training, which can stall the CUDA cores when memory bandwidth or latency becomes the limiting factor. In essence, Ada’s cache redesign helps “feed” its massive core count effectively, whereas Pascal is more likely to be memory-bound once its tiny caches are exhausted. (It’s worth noting that Ada’s cache boost was partly to offset only moderate GDDR6X bandwidth gains – in fact, AD102’s full 96 MB L2 was cut to 72 MB on the 4090 card (Microbenchmarking Nvidia's RTX 4090 - Chips and Cheese) (Ada Lovelace Whitepaper : r/nvidia - Reddit) – but even 72 MB is tremendous compared to Pascal’s 2.75 MB.)
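
A simple roofline estimate makes the "memory-bound" point concrete. A kernel is limited by DRAM bandwidth whenever its arithmetic intensity (FLOPs per byte moved to or from VRAM) is below peak FLOPS divided by peak bandwidth, the so-called ridge point. The sketch uses the FP32 peaks and bandwidths quoted in this report. It assumes an idealized square FP32 GEMM that touches A, B, and C exactly once, which is the best case that tiling through L1/L2 tries to approach.

```python
"""Roofline ridge points and the smallest square FP32 GEMM that is compute-bound."""
import math


def ridge_point(peak_tflops: float, bandwidth_gbs: float) -> float:
    """FLOPs per DRAM byte a kernel needs before it stops being bandwidth-bound."""
    return (peak_tflops * 1e12) / (bandwidth_gbs * 1e9)


def square_gemm_intensity(n: int, bytes_per_elem: int = 4) -> float:
    """Ideal intensity of C = A @ B (n x n): 2n^3 FLOPs over 3n^2 elements moved once."""
    return (2 * n**3) / (3 * n**2 * bytes_per_elem)


def min_compute_bound_n(peak_tflops: float, bandwidth_gbs: float, bytes_per_elem: int = 4) -> int:
    """Smallest n whose ideal intensity reaches the ridge point."""
    # intensity = 2n / (3 * bytes_per_elem), so n >= ridge * 3 * bytes_per_elem / 2
    return math.ceil(ridge_point(peak_tflops, bandwidth_gbs) * 3 * bytes_per_elem / 2)


if __name__ == "__main__":
    for name, tflops, bw in (("GTX 1080 Ti", 11.3, 484), ("RTX 4090", 82.6, 1008)):
        print(f"{name}: ridge {ridge_point(tflops, bw):.1f} FLOP/B, "
              f"GEMM compute-bound from n = {min_compute_bound_n(tflops, bw)}")
```

Under these assumptions, the 4090 needs about 3.5× more reuse per byte than the 1080 Ti before its FP32 units become the bottleneck, and far more once Tensor Cores are involved. This is one way to see why Ada pairs its core count with a much larger L2.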

PCIe Interface (Gen3 vs Gen4): The 1080 Ti uses a PCIe 3.0 x16 host interface (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database), while the 4090 supports PCIe 4.0 x16 (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database). PCIe 4.0 doubles the theoretical transfer rate (~32 GB/s each way for x16 vs ~16 GB/s on PCIe 3.0). In practice, however, for most single-GPU training workloads this difference has minimal impact (The Best GPUs for Deep Learning in 2023 — An In-depth Analysis). Once data is loaded into GPU memory, training/inference mostly runs on the device. A faster bus helps when streaming very large datasets or doing frequent host-device transfers (or if multiple GPUs communicate peer-to-peer across PCIe). But as one expert notes, for typical deep learning setups “PCIe 4.0…does not yield many benefits” unless you have multi-GPU parallelization or a GPU cluster (The Best GPUs for Deep Learning in 2023 — An In-depth Analysis). PCIe 3.0 already provides enough bandwidth for most data input pipelines feeding a 1080 Ti; the 4090’s PCIe 4.0 is more of a future-proofing measure that benefits specific scenarios (like multi-GPU setups exchanging data over PCIe, or using the GPU for large data retrieval tasks). One caveat: the 4090 dropped NVLink support (present on the RTX 3090 and pro Ampere cards), so multi-GPU 4090 setups rely on PCIe for inter-GPU communication. The 1080 Ti similarly had no NVLink (Pascal NVLink was only on Tesla P100/GP100), only old SLI for graphics, which doesn’t help ML. Thus, both are limited to PCIe for multi-GPU memory exchange – an area where the 4090’s Gen4 and larger VRAM give it an edge when scaling out.
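
Per-direction PCIe bandwidth is the per-lane transfer rate times the lane count, minus line-code overhead. PCIe 3.0 and 4.0 both use 128b/130b encoding. Below is a minimal sketch. It ignores packet and protocol overhead, so real transfers land somewhat lower. The 2 GB payload is a hypothetical example.

```python
"""Theoretical one-direction PCIe bandwidth for the 1080 Ti (Gen3) and 4090 (Gen4)."""

GT_PER_SEC_PER_LANE = {3: 8.0, 4: 16.0}  # giga-transfers per second per lane
ENCODING_EFFICIENCY = 128 / 130           # 128b/130b line code (PCIe 3.0 and 4.0)


def pcie_gbs(gen: int, lanes: int = 16) -> float:
    """One-direction GB/s before packet/protocol overhead."""
    return GT_PER_SEC_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes / 8


def transfer_ms(num_bytes: float, gen: int, lanes: int = 16) -> float:
    """Best-case time to move num_bytes across the link in one direction."""
    return num_bytes / (pcie_gbs(gen, lanes) * 1e9) * 1e3


if __name__ == "__main__":
    payload = 2e9  # hypothetical 2 GB batch of training data
    for gen in (3, 4):
        print(f"PCIe {gen}.0 x16: {pcie_gbs(gen):.2f} GB/s, 2 GB in {transfer_ms(payload, gen):.0f} ms")
```

Each card's link is roughly 30× slower than its own VRAM bandwidth (about 15.75 GB/s vs 484 GB/s, and about 31.5 GB/s vs 1008 GB/s). This is why spilling to host memory mid-training is so costly on either card.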

AI Training & Inference Capabilities

Tensor Core Support and Mixed Precision: One of the biggest differentiators is Tensor Core support. The GTX 1080 Ti has no Tensor Cores, so it must execute all deep learning math on traditional CUDA cores. The RTX 4090’s 512 Tensor Cores (Ada 4th-gen) enable mixed-precision training (FP16 or BF16 with FP32 accumulate) and specialized tensor operations at very high throughput (Ada Lovelace (microarchitecture) - Wikipedia). For example, matrix multiplications – the core of transformer networks (Q·K^T attention scores, dense layers, etc.) – can be offloaded to Tensor Cores on the 4090, achieving many times the throughput of FP32 CUDA cores. Pascal consumer cards can store and process FP16 data, but native FP16 arithmetic on GP102 runs at only 1/64 of the FP32 rate, so libraries commonly keep FP16 for storage and compute in FP32; the result is at best a very modest speedup (in one test, the 1080 Ti was only slightly faster in FP16 mode than in FP32) (Four generations of Nvidia GPUs compared | Computer Vision Lab). The Puget Systems benchmark likewise calls the 1080 Ti’s FP16 performance “anemic” for this kind of work (LLM Inference - Consumer GPU performance | Puget Systems). This manifests in real workloads: for instance, in an LLM inference benchmark (using FP16/INT4 quantization for a Llama model), the 1080 Ti was 5× slower than even a low-end RTX 4060, simply because it lacks Tensor Cores and had to brute-force the math in FP32/FP16 on CUDA cores (LLM Inference - Consumer GPU performance | Puget Systems). With the 4090, NVIDIA’s 4th-gen Tensor Cores bring support for FP16, BF16, INT8, and FP8 data types with mixed-precision accumulation (Ada Lovelace (microarchitecture) - Wikipedia). They also incorporate the sparsity feature introduced in Ampere (where 2:4 structured sparsity in weights can double throughput) (Ada Lovelace (microarchitecture) - Wikipedia). None of these benefits apply to the 1080 Ti.
In summary, the 4090 is built to excel at mixed precision; the 1080 Ti can only do full FP32 (or risk lower accuracy with limited FP16). As a result, training in FP16/FP32 mixed mode (enabled by frameworks’ AMP autocast) yields substantial speedups on the 4090, whereas on the 1080 Ti it brings negligible speed gains, and reduced activation memory is its main benefit.
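
The following is a minimal PyTorch sketch of gating autocast on compute capability. It assumes a CUDA build of PyTorch. `amp_settings` and `train_step` are hypothetical helper names. The policy (BF16 on compute capability 8.x and newer, FP16 plus loss scaling on 7.x, plain FP32 on Pascal) is one reasonable choice, not a PyTorch default. Querying `torch.cuda.get_device_capability()` up front also makes it obvious when mixed precision was assumed but never actually turned on.

```python
"""Gate PyTorch autocast on CUDA compute capability (hypothetical helpers)."""
import contextlib


def amp_settings(capability):
    """Map a (major, minor) compute capability to (use_autocast, dtype_name, use_grad_scaler)."""
    major, _minor = capability
    if major >= 8:   # Ampere/Ada and newer: Tensor Cores with BF16, which needs no loss scaling
        return True, "bfloat16", False
    if major >= 7:   # Volta/Turing: FP16 Tensor Cores, paired with a GradScaler
        return True, "float16", True
    return False, "float32", False   # Pascal (6.x) and older: no Tensor Cores, stay in FP32


def train_step(model, inputs, targets, loss_fn, optimizer, scaler, capability):
    """One optimizer step that only enters autocast when amp_settings says it pays off."""
    import torch  # lazy import so amp_settings is usable without PyTorch installed

    use_amp, dtype_name, _ = amp_settings(capability)
    autocast_ctx = (
        torch.autocast(device_type="cuda", dtype=getattr(torch, dtype_name))
        if use_amp
        else contextlib.nullcontext()
    )
    optimizer.zero_grad(set_to_none=True)
    with autocast_ctx:
        loss = loss_fn(model(inputs), targets)
    # With a disabled GradScaler these three calls reduce to backward() and optimizer.step().
    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()
    return loss.item()


# Usage sketch (requires a CUDA build of PyTorch):
#   import torch
#   cap = torch.cuda.get_device_capability()   # (6, 1) on a GTX 1080 Ti, (8, 9) on an RTX 4090
#   scaler = torch.amp.GradScaler("cuda", enabled=amp_settings(cap)[2])
#   loss = train_step(model, x, y, torch.nn.functional.cross_entropy, optimizer, scaler, cap)
```

Older PyTorch releases spell the scaler `torch.cuda.amp.GradScaler(enabled=...)`. Keeping Pascal in plain FP32 follows the point above that autocast buys little speed there. If memory rather than speed is the constraint, FP16 autocast on a 1080 Ti is still worth measuring.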

Compute Capability and Framework Support: The 1080 Ti is Compute Capability 6.1, while the RTX 4090 is Compute Capability 8.9 (Ampere spans 8.0, 8.6, and 8.7, so Ada is slightly higher). The newer compute capability reflects support for newer instructions and data formats. For example, CC 7.0+ (Volta/Turing) was required for Tensor Core WMMA instructions; CC 8.0 (Ampere) added TensorFloat-32 and BF16 support on Tensor Cores; Ada’s 8.9 adds FP8 support (as seen in CUDA 12.x) (Ada GeForce (RTX 4090) FP8 cuBLASLt performance). What this means for frameworks like PyTorch, TensorFlow, cuDNN and TensorRT is that the 4090 can leverage the latest kernels and optimizations, whereas the 1080 Ti may be limited to older code paths. cuDNN (NVIDIA’s deep learning primitives library) will use Tensor Core-accelerated kernels on the 4090 for convolutions, GEMMs, etc., but on a 1080 Ti it will fall back to legacy implementations running on CUDA cores (typically FP32 kernels, including Winograd-based convolutions). Similarly, TensorRT can do INT8 acceleration on the 4090 (using its Tensor Cores for INT8 matrix ops), but on Pascal, INT8 only runs on a slower CUDA-core path. (Notably, Pascal’s compute capability 6.1 chips, GP102 included, introduced an INT8 dot product instruction called DP4A (1. Pascal Tuning Guide — Pascal Tuning Guide 12.8 documentation) – the GTX 1080 Ti can perform 8-bit dot products on its CUDA cores with instruction throughput equal to FP32 operations. In fact, NVIDIA’s Tesla P4 (Pascal) was marketed for INT8 inference. However, even with DP4A, the 1080 Ti tops out at ~45 INT8 TOPS, whereas the 4090’s Tensor Cores achieve >660 INT8 TOPS (or ~1320 TOPS with sparsity) (1. Pascal Tuning Guide — Pascal Tuning Guide 12.8 documentation) (RTX 4090 GPU Rental | Vast.ai) – roughly 15× higher for dense math, or about 29× with sparsity.) In effect, the 4090’s higher compute capability brings full compatibility with modern AI techniques: BF16 training (widely used in transformers) is supported, whereas the 1080 Ti cannot do native BF16.
CUDA libraries have also evolved – some recent features (like certain multi-GPU communication features or fused attention kernels) target compute 7.x/8.x GPUs; the 1080 Ti might not benefit or may even lose support in future software updates. Currently, both GPUs are supported by NVIDIA’s drivers and CUDA 12, but the 1080 Ti is an “old generation” card, so future framework versions may eventually deprecate CC 6.x. In contrast, the 4090 will enjoy support for years and is aligned with the latest NVIDIA AI software stack (including optimizations for transformers, such as the FasterTransformer library and Transformer Engine features that utilize FP8/FP16 – none of which the 1080 Ti can use).
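
The INT8 comparison uses the same cores × ops × clock arithmetic. DP4A multiplies four pairs of signed 8-bit integers and adds the products into a 32-bit accumulator. That is 8 integer ops per instruction, issued at the FP32 rate per the Pascal Tuning Guide description cited above. Below is a minimal pure-Python sketch of that instruction's semantics plus the peak it implies. The 660 and 1320 TOPS figures for the 4090 are taken from the text, and `dp4a`/`dp4a_tops` are hypothetical helper names.

```python
"""What DP4A computes, and the INT8 peak it implies for a GTX 1080 Ti."""


def dp4a(a4, b4, acc):
    """Emulate DP4A: acc + dot product of four signed 8-bit pairs."""
    if len(a4) != 4 or len(b4) != 4:
        raise ValueError("DP4A operates on exactly four element pairs")
    if not all(-128 <= v <= 127 for v in (*a4, *b4)):
        raise ValueError("operands must fit in int8")
    # Python ints never overflow; the hardware accumulates in a 32-bit register.
    return acc + sum(x * y for x, y in zip(a4, b4))


def dp4a_tops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak INT8 TOPS if every CUDA core issues one DP4A (4 multiplies + 4 adds) per clock."""
    ops_per_dp4a = 8
    return cuda_cores * ops_per_dp4a * boost_mhz * 1e6 / 1e12


gtx_1080_ti_int8 = dp4a_tops(3584, 1582)   # ~45.4 TOPS
rtx_4090_int8_dense = 660.0                # TOPS on Tensor Cores, as quoted in the text
rtx_4090_int8_sparse = 1320.0              # with 2:4 structured sparsity, as quoted

if __name__ == "__main__":
    print(dp4a([1, 2, 3, 4], [5, 6, 7, 8], 10))   # prints 80
    print(f"1080 Ti DP4A peak: {gtx_1080_ti_int8:.1f} TOPS")
    print(f"4090 / 1080 Ti: {rtx_4090_int8_dense / gtx_1080_ti_int8:.1f}x dense, "
          f"{rtx_4090_int8_sparse / gtx_1080_ti_int8:.1f}x sparse")
```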

AI Performance Benchmarks: The practical impact of the above hardware differences is massive. Across a variety of neural network workloads, the RTX 4090 handily outperforms the GTX 1080 Ti by several factors. In one set of tests spanning vision and NLP models, the 4090 delivered on average ~6× the training throughput of the 1080 Ti in FP16 precision (Four generations of Nvidia GPUs compared | Computer Vision Lab). For example, on ResNet-50 training, a GTX 1080 Ti achieves around 185 images/sec (FP32) or 235 img/s (FP16) with a large batch size, whereas the RTX 4090 reaches ~721 img/s (FP32/TF32) and ~1285 img/s (AMP FP16) on the same task (Four generations of Nvidia GPUs compared | Computer Vision Lab) (Four generations of Nvidia GPUs compared | Computer Vision Lab). That is roughly a 5.5× increase in FP16 training speed for ResNet-50. The gap grows with model complexity: on a transformer-based Swin-Transformer (swin_base) inference, the 4090 processed 1692 samples/s vs only 152 on 1080 Ti – over 11× faster (Four generations of Nvidia GPUs compared | Computer Vision Lab). Even in FP32 (no tensor cores), the 4090 tends to be 3–4× faster than 1080 Ti (Four generations of Nvidia GPUs compared | Computer Vision Lab) due to sheer core count and clock speed. But once Tensor Cores are in play, the 4090 can be 5–7× faster in training throughput on CNNs and even more on transformer models (which heavily use matrix multiplies) (Four generations of Nvidia GPUs compared | Computer Vision Lab) (LLM Inference - Consumer GPU performance | Puget Systems). 
Another example: BERT-base fine-tuning, which involves many GEMMs – the 1080 Ti might train at only ~2–3 sequences/sec in FP32, whereas the 4090 in mixed precision can fine-tune at an order of magnitude higher rate (numbers vary by implementation, but one Lambda Labs benchmark showed ~137 seq/s on 4090 vs 85 seq/s on a 3090 in BERT fine-tuning (NVIDIA GeForce RTX 4090 vs RTX 3090 Deep Learning Benchmark); the 1080 Ti would be far below the 3090). For inference of large language models, the difference is equally stark. Puget Systems found that processing a prompt on a quantized Llama model took the 1080 Ti five times longer than even a budget Ada GPU (LLM Inference - Consumer GPU performance | Puget Systems). The 1080 Ti simply cannot execute INT8/FP16 fast enough to keep up with modern large models (it often has to use FP32 or spill to CPU). Meanwhile, the 4090 can use INT8 Tensor Core acceleration for any model that fits in its 24 GB once quantized, whereas the 1080 Ti would be impractically slow on the same model, if it fit at all (truly huge models such as the 175B-parameter GPT-3 fit on neither card, even at INT8). FLOPs-wise, the 1080 Ti’s ~11.3 TFLOPS vs the 4090’s ~82.6 TFLOPS (FP32) already implies a ~7.3× raw difference, but for AI matrix math the effective gap is much larger – the 4090 can exceed 330 TFLOPS of dense FP16 and reach about 1.3 PFLOPS on FP8/INT8 tensor operations with 2:4 sparsity (Anyone was able to use FP8 with the new rtx 4090? : r/nvidia - Reddit) (Ada Lovelace (microarchitecture) - Wikipedia), whereas the 1080 Ti tops out at ~11 TFLOPS for floating-point math, FP16 included, and ~45 TOPS for INT8 via DP4A. This translates to the 4090 training large transformers or CNNs several times faster, and being able to handle real-time inference for models that would overwhelm a 1080 Ti.
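
Throughput is easier to reason about as wall-clock time. The sketch below converts the ResNet-50 training throughputs quoted above into minutes per epoch. It assumes the 1,281,167-image ImageNet-1k training split, which is an illustrative assumption. The images-per-second figures themselves come from the cited Computer Vision Lab comparison.

```python
"""Convert quoted ResNet-50 training throughput into minutes per ImageNet epoch."""

IMAGENET_1K_TRAIN_IMAGES = 1_281_167  # assumption: full ImageNet-1k training split

# Images/sec as quoted from the Computer Vision Lab benchmark in the text.
RESNET50_IMG_PER_SEC = {
    ("GTX 1080 Ti", "FP32"): 185,
    ("GTX 1080 Ti", "FP16"): 235,
    ("RTX 4090", "FP32/TF32"): 721,
    ("RTX 4090", "AMP FP16"): 1285,
}


def epoch_minutes(images_per_sec: float, num_images: int = IMAGENET_1K_TRAIN_IMAGES) -> float:
    """Minutes for one pass over the dataset at steady throughput (ignores input-pipeline stalls)."""
    return num_images / images_per_sec / 60


if __name__ == "__main__":
    for (card, mode), ips in RESNET50_IMG_PER_SEC.items():
        print(f"{card:12} {mode:10} {epoch_minutes(ips):6.1f} min/epoch")
    speedup = RESNET50_IMG_PER_SEC[("RTX 4090", "AMP FP16")] / RESNET50_IMG_PER_SEC[("GTX 1080 Ti", "FP16")]
    print(f"FP16 speedup: {speedup:.1f}x")
```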

Memory Bottlenecks and Model Size: Large transformer models (with hundreds of millions or billions of parameters) are extremely memory-hungry. The 11 GB of the 1080 Ti severely limits the size of models or batches that can be trained or run without out-of-core memory techniques. For instance, BERT-Large fine-tuning might barely fit into 11 GB at small batch sizes. GPT-2 XL (1.5B parameters) needs only ~3 GB for its FP16 weights, so inference fits, but full fine-tuning with Adam takes roughly 16 bytes per parameter (~24 GB) before activations. The 1080 Ti would therefore need slower strategies (like offloading optimizer state or layers to the CPU, or gradient checkpointing to trade compute for memory). The RTX 4090’s 24 GB comfortably accommodates models of this size for inference, gives far more headroom for training, and even allows some of the newer 13B+ parameter models to be loaded if using FP8 or 4-bit quantization. For training, the larger memory lets the 4090 use larger batch sizes, which improves GPU utilization and convergence stability. The 1080 Ti often forces smaller batches, which not only run slower (less parallelism) but can degrade model quality or require more training steps. Moreover, the 1080 Ti can’t hold as much data, so it may spend more time on data transfers (if the dataset is large) or on offloading activations to host memory to save VRAM. The 4090 also benefits from higher memory bandwidth and its huge L2 cache to keep those massive models fed; the 1080 Ti, even when it can fit a model, might become memory-bound when the working set doesn’t fit in cache (for example, large attention matrices could cause many VRAM transactions). Interestingly, one benchmark noted that pure memory bandwidth is not everything – despite the 1080 Ti’s decent 484 GB/s, its lack of FP16 compute made it underperform GPUs with lower bandwidth but higher FP16 throughput on token generation (LLM Inference - Consumer GPU performance | Puget Systems).
Still, if a model does exceed GPU memory, the 4090’s extra VRAM can avoid the drastic slowdowns from spilling to system RAM. Overall, the 4090 vastly reduces memory bottlenecks for training giant transformer models compared to the 1080 Ti; it enables research on large networks on a single GPU that would have been impossible or painfully slow on the Pascal card.
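
Whether a model fits is mostly bytes-per-parameter arithmetic. Below is a rough sketch. It assumes 2 bytes per parameter for FP16 weights, 1 for INT8, and 0.5 for 4-bit. For Adam training, it uses the common mixed-precision rule of thumb of ~16 bytes per parameter. It treats the marketed 11 GB and 24 GB as GiB. Activations, KV cache, and framework overhead are deliberately excluded, so real usage is higher. The model sizes are illustrative.

```python
"""Rough parameter-state footprint versus VRAM (activations and overhead excluded)."""

GIB = 1024**3

# Bytes per parameter; the Adam figure is the common mixed-precision rule of thumb:
# FP16 weights (2) + FP16 grads (2) + FP32 master weights and two Adam moments (12).
BYTES_PER_PARAM = {
    "fp16 inference": 2.0,
    "int8 inference": 1.0,
    "4-bit inference": 0.5,
    "adam training": 16.0,
}

# Assumption: the marketed 11 GB / 24 GB capacities are treated as GiB.
VRAM_GIB = {"GTX 1080 Ti": 11, "RTX 4090": 24}


def footprint_gib(num_params: float, mode: str) -> float:
    """Parameter-related memory for a given mode, in GiB."""
    return num_params * BYTES_PER_PARAM[mode] / GIB


def fits(num_params: float, mode: str, card: str) -> bool:
    """True if parameter-related state alone fits; real runs also need activations/KV cache."""
    return footprint_gib(num_params, mode) <= VRAM_GIB[card]


if __name__ == "__main__":
    examples = [("GPT-2 XL", 1.5e9), ("GPT-J", 6e9), ("13B model", 13e9)]
    for name, params in examples:
        for mode in BYTES_PER_PARAM:
            verdict = {card: fits(params, mode, card) for card in VRAM_GIB}
            print(f"{name:10} {mode:16} {footprint_gib(params, mode):6.1f} GiB  {verdict}")
```

Under these assumptions, GPT-2 XL's FP16 weights take ~2.8 GiB, while full Adam fine-tuning needs ~22 GiB of parameter state before any activations. This is why full fine-tuning is the hard case on 11 GB and tight even on 24 GB.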

Software and Compatibility

CUDA, cuDNN, and Framework Support: Both GPUs are supported by NVIDIA’s software stack today, but the level of support and optimization differs. The RTX 4090, being a recent-generation device, is fully supported from CUDA 11.8 onward (including CUDA 12) and by recent cuDNN releases. Developers can compile kernels for compute capability 8.9 to take advantage of Ada features. Frameworks like PyTorch and TensorFlow include tuned kernels for Ampere/Ada GPUs – for example, convolution and GEMM kernels that use Tensor Cores (via WMMA instructions) and block-sparse operations. On Pascal (compute 6.1), those modern code paths are disabled or not applicable. Instead, the 1080 Ti will use older cuDNN kernels (often FP32, or FP16 storage with FP32 compute on CUDA cores) that are less efficient. Certain new features in deep learning frameworks also assume Turing/Ampere or later. For instance, automatic mixed precision (AMP) in PyTorch will autocast to FP16/BF16 and leverage Tensor Cores on GPUs that have them. On a 1080 Ti, AMP can still reduce memory by storing activations in FP16, but it won’t see the massive speed boost (the actual math will run in FP32 or inefficient FP16). Another consideration is driver support: the 1080 Ti is still covered by NVIDIA’s Game Ready and Studio drivers, but it dates from 2017, so it is nearing the end of mainstream support. When NVIDIA retires an architecture from its mainline drivers, it typically moves it to a legacy branch that receives only critical fixes. Ada, being new, will receive ongoing driver optimizations specifically targeting AI workloads (e.g. better scheduling, fewer overheads for large models). TensorRT, used for optimized inference, includes many tactics (fused kernels, INT8 calibration, etc.) that expect Tensor Core support. Running TensorRT on a 1080 Ti is possible, but without Tensor Cores its INT8 path is limited to DP4A on CUDA cores and many of the fastest kernels won’t be available, forfeiting much of its acceleration.
In short, the RTX 4090 has full compatibility with current and next-gen AI software, whereas the GTX 1080 Ti is now a legacy card that, while still CUDA-capable, won’t be able to make use of many modern performance features in frameworks.

Optimizations for Transformer Models: NVIDIA has put significant effort into optimizing transformer model training and inference (owing to the popularity of BERT, GPT, etc.). The RTX 4090 benefits from these innovations. For example, NVIDIA’s Transformer Engine (introduced with Hopper, but aspects available to Ampere/Ada) dynamically switches between FP16 and FP8 to maximize speed while preserving accuracy – the 1080 Ti cannot partake in this, as it lacks the hardware for FP8/BF16. Libraries like FasterTransformer leverage Tensor Cores, large shared memory, and multi-stream concurrency to speed up transformer layers; these assume at least Turing/Ampere GPUs. The 4090 also supports the simultaneous execution of inferencing and copy operations more efficiently, which can be useful in pipelines serving transformer models (e.g. overlapping data preprocessing on CPU with GPU compute). While the 1080 Ti can run transformer models (indeed it was used for pioneering BERT implementations in 2018), it often requires workarounds: reduced sequence lengths, operations partitioned to avoid using too much memory, or running attention on smaller blocks due to memory constraints. Large-scale inference on a 1080 Ti is challenging. For example, serving the 6B-parameter GPT-J model in FP16 (about 12 GB of weights) would require model parallelism across GPUs or offloading weights layer-by-layer from the CPU, incurring latency that the 4090, with its ample VRAM and fast compute, can avoid. Also, newer optimization techniques like FlashAttention (an IO-aware attention algorithm that computes attention in on-chip tiles instead of materializing the full score matrix in VRAM) and fused multi-head attention kernels are available in the latest software but typically target SM 7.x/8.x GPUs (FlashAttention-2, for instance, requires Ampere or newer). The 1080 Ti might not get these kernels, or if it does, they won’t run as fast without Tensor Core usage.
To sum up, the 4090 is well-suited to large transformers out-of-the-box – it can utilize cutting-edge fused kernels and mixed precision to maximize throughput – whereas the 1080 Ti will struggle and likely require custom adjustments to even run huge transformer models, let alone in an optimized fashion.
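
Fused attention matters on memory-limited cards for a simple reason. A naive implementation materializes a batch × heads × seq_len × seq_len score tensor, which grows quadratically with sequence length. FlashAttention-style kernels instead process it in on-chip tiles and never store it whole. Below is a back-of-the-envelope sketch. The batch of 8 and 12 heads are hypothetical, BERT-base-like settings. Only one layer's score tensor is counted, although training also keeps such tensors around for the backward pass.

```python
"""Memory for the full attention score tensor that naive attention materializes."""

MIB = 1024**2


def attention_scores_mib(batch: int, heads: int, seq_len: int, bytes_per_elem: int = 2) -> float:
    """Size of one layer's softmax(QK^T) tensor: batch x heads x seq_len x seq_len elements."""
    return batch * heads * seq_len * seq_len * bytes_per_elem / MIB


if __name__ == "__main__":
    # Hypothetical BERT-base-like setting: batch 8, 12 heads, FP16 scores.
    for seq_len in (512, 2048, 4096):
        print(f"seq_len={seq_len:5}: {attention_scores_mib(8, 12, seq_len):8.1f} MiB per layer")
```

In PyTorch 2.x, `torch.nn.functional.scaled_dot_product_attention` dispatches to a fused backend when the GPU and dtype allow it. Otherwise it falls back to a plain math implementation, so it is a reasonable default on either card.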

Driver and Ecosystem Differences: Being a newer card, the RTX 4090 also introduces support for newer technologies like PCIe Resizable BAR (allowing the CPU direct access to the full VRAM, useful in some data-loading cases) and has an updated NVENC/NVDEC video engine (useful if doing video AI or encoding results). The 1080 Ti’s video encode/decode is older (no AV1 support, etc.), though that doesn’t directly affect training except in video data pipelines. The power management and thermal design of the 4090 is also oriented toward sustained AI load; it’s a 450 W card and will draw heavy power under continuous training, but its cooling is robust. The 1080 Ti (250 W) will draw less power but also has less compute to show for it – efficiency per training throughput is actually in favor of the 4090 in many cases (the 4090 achieves far more FLOPs per watt thanks to architectural efficiency improvements) (NVIDIA GeForce RTX 4090 vs RTX 3090 Deep Learning Benchmark). On the software side, users of the 1080 Ti today might encounter memory fragmentation or driver issues when using the latest frameworks, simply because testing focus is now on newer GPUs. Meanwhile, NVIDIA often provides specific tuned deep learning libraries for current GPUs (for example, cuDNN’s most optimized path targets Ampere/Ada Tensor Cores, and older GPUs won’t see those speed-ups). It’s also worth noting that the RTX 4090, despite being a “GeForce” card, can run many professional and HPC stacks (like CUDA-enabled HPC applications) with nearly the same software features as a Tesla except ECC memory. The 1080 Ti similarly can run those. Neither card supports features such as SR-IOV virtualization or MIG partitioning, which are reserved for datacenter GPUs and are not relevant to most users.
All in all, developers will find better and longer support for AI frameworks on the 4090, and more room to leverage upcoming software enhancements for AI.
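
Here is a crude version of the efficiency argument. It uses only the FP32 peaks and board powers quoted in this report and assumes each card draws its full rated power at peak throughput. That is a simplification, since real training rarely sits exactly at either limit.

```python
"""Peak FP32 GFLOPS per watt of rated board power."""

CARDS = {
    # name: (peak FP32 TFLOPS, rated board power in watts), as quoted in the text
    "GTX 1080 Ti": (11.3, 250),
    "RTX 4090": (82.6, 450),
}


def gflops_per_watt(peak_tflops: float, board_power_w: float) -> float:
    """Peak GFLOPS divided by rated board power."""
    return peak_tflops * 1000 / board_power_w


if __name__ == "__main__":
    eff = {name: gflops_per_watt(*spec) for name, spec in CARDS.items()}
    for name, value in eff.items():
        print(f"{name}: {value:.1f} GFLOPS/W")
    print(f"Ratio: {eff['RTX 4090'] / eff['GTX 1080 Ti']:.1f}x")
```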

Niche Details and Theoretical Limits

Undocumented/Quirky Performance Characteristics: Each architecture has its own quirks. Pascal GPUs like the 1080 Ti, for instance, have a feature where global memory accesses by default skip the L1 cache (caching in L2 only) unless a special flag is used (1. Pascal Tuning Guide — Pascal Tuning Guide 12.8 documentation). This was an optimization for graphics workloads but could hurt certain compute patterns – developers had to explicitly opt in to L1 caching for better performance on data reuse. Newer architectures (Turing and beyond) simplified this. The Ada-based 4090 likely caches more aggressively by default, benefiting AI workloads with locality. Another consideration: the 1080 Ti’s SM cannot execute FP32 and INT32 instructions simultaneously. Starting with Volta and Turing, the architectures can execute integer instructions (for addressing, loop indexing) concurrently with FP32 on separate pipelines. This means Ada can better utilize its units, whereas Pascal might see minor stalls when, say, performing address calculations for sparse operations. While not usually a major factor in dense AI math, this could affect certain kernels. Also, Ada introduced Shader Execution Reordering (SER) for ray tracing, which doesn’t directly apply to AI, but the underlying scheduling improvements could make the GPU a bit more efficient at handling divergent workloads (maybe minor gains in irregular GPU compute tasks). The 1080 Ti, being older, also lacks the hardware-accelerated Multi-Process Service (MPS) introduced with Volta, so sharing a single GPU among multiple processes or tenants, something Ada handles well, is less efficient on Pascal.

Workarounds for AI on GTX 1080 Ti: Given its limitations, 1080 Ti users historically relied on various tricks to train models. One common workaround was pseudo half-precision: storing weights and gradients in FP16 to save memory while doing the calculations in FP32. This halves the memory those tensors occupy, so 11 GB holds as many values as roughly 22 GB would in FP32 (The Best GPUs for Deep Learning in 2023 — An In-depth Analysis), at the cost of extra conversion operations. It helped fit larger models on the 1080 Ti and reduced memory bandwidth usage, but didn't speed up compute much. Another technique is gradient checkpointing (also known as rematerialization). It isn't unique to the 1080 Ti, but it is practically essential for training deeper networks on it: by not storing all intermediate activations and recomputing them during backprop, you save memory at the expense of extra compute, a trade-off that is often worthwhile on VRAM-starved GPUs. The 1080 Ti's slower compute makes this painful (recomputation is slow), whereas the 4090 handles it more easily when needed. For inference, 1080 Ti users often resort to 8-bit or 4-bit quantization to shrink the model. The 1080 Ti has no Tensor Cores, but a well-quantized model at least fits entirely in VRAM, and the CUDA cores can then perform int8 arithmetic with the DP4A instruction, which computes a dot product of four packed 8-bit integers with 32-bit accumulation (1. Pascal Tuning Guide — Pascal Tuning Guide 12.8 documentation). This is still far slower than the 4090's int8 Tensor Cores, but it can make the difference between running a model and not running it at all on 11 GB. There are also multi-GPU tricks: because the 1080 Ti has no NVLink, some users attempted model parallelism across several 1080 Ti GPUs over PCIe. This incurs latency, but with careful partitioning (each GPU holds different layers of the model), it was a way to train models larger than 11 GB. The 4090 doesn't require such complexity for most models until you hit truly massive scales.
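The DP4A path can be illustrated without a GPU. The pure-Python sketch below quantizes two float vectors to int8 with a symmetric per-tensor scale (an assumed, deliberately simple scheme; real quantization libraries use more elaborate ones), packs four int8 values into each 32-bit word, and emulates what one DP4A instruction does: multiply the four byte pairs and add the products to a 32-bit accumulator. On the GPU this operation is exposed in CUDA C++ as the `__dp4a` intrinsic (compute capability 6.1 and later); all function names here are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def pack_int8x4(four):
    """Pack four signed 8-bit integers into one 32-bit word (lowest byte first)."""
    word = 0
    for i, v in enumerate(four):
        word |= (v & 0xFF) << (8 * i)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit integers."""
    out = []
    for i in range(4):
        byte = (word >> (8 * i)) & 0xFF
        out.append(byte - 256 if byte >= 128 else byte)
    return out


def dp4a(a_word, b_word, acc):
    """Emulate DP4A: acc + sum of the four int8*int8 products, as a signed 32-bit int."""
    total = acc + sum(x * y for x, y in zip(unpack_int8x4(a_word), unpack_int8x4(b_word)))
    total &= 0xFFFFFFFF  # wrap like a 32-bit hardware accumulator
    return total - (1 << 32) if total >= (1 << 31) else total


def int8_dot(a, b):
    """Approximate dot(a, b) using int8 storage and DP4A-style accumulation."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    pad = (-len(qa)) % 4  # zero-pad to a multiple of 4 elements
    qa += [0] * pad
    qb += [0] * pad
    acc = 0
    for i in range(0, len(qa), 4):
        acc = dp4a(pack_int8x4(qa[i:i + 4]), pack_int8x4(qb[i:i + 4]), acc)
    # Dequantize the integer accumulator back to a float result.
    return acc * scale_a * scale_b


if __name__ == "__main__":
    a = [0.5, -1.2, 3.3, 0.0, 2.1, -0.7]
    b = [1.1, 0.25, -0.5, 2.0, 0.1, 1.5]
    exact = sum(x * y for x, y in zip(a, b))
    print(f"exact: {exact:.4f}  int8/DP4A: {int8_dot(a, b):.4f}")
```

The int8 result lands close to the exact dot product (the gap is quantization error), while each emulated DP4A call consumes four element pairs from a single 32-bit word per operand, which is where the throughput gain over scalar FP32 math comes from.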

Architectural Constraints in Practice: Because of its era, the 1080 Ti cannot use certain acceleration modes. For example, Tensor Core sparse acceleration (2:4 structured sparsity, which can double math throughput on Ampere/Ada) has no equivalent on Pascal; any benefit from sparsity on the 1080 Ti would have to come from library-level optimizations that skip computations, which seldom yield as clean a 2× gain. Memory compression has also improved across generations: Ada GPUs have more advanced lossless framebuffer compression, which can raise effective memory bandwidth for certain access patterns, while Pascal had an earlier version of this technology. One might therefore encounter scenarios where memory bandwidth is the ceiling on the 1080 Ti, whereas on the 4090 the combination of compression and a much larger L2 cache might alleviate it. Another subtle constraint: the 1080 Ti's compute capability is 6.1, so it lacks CUDA features introduced with compute capability 7.x, such as independent thread scheduling. The 4090 supports independent thread scheduling, which lets threads within a warp diverge and synchronize with each other more flexibly, so fine-grained intra-warp synchronization patterns that could deadlock on Pascal are safe on Ada. From a theoretical perspective, the 4090 is so powerful that other system components can bottleneck it; for example, feeding it data fast enough can be an issue (hence the need for fast storage, CPU, etc.), whereas the 1080 Ti was often the bottleneck itself in training setups. It's also worth noting the power and cooling constraints: the 4090 will throttle if it is inadequately cooled or power-limited, but in a well-built system it can sustain its enormous throughput. The 1080 Ti, drawing less power, is easier to keep at max clocks but simply has far lower peak performance to give. Finally, we should highlight that the GTX 1080 Ti was a legendary card in its time: it offered enthusiasts a taste of deep learning performance that was cutting-edge in 2017. But the field has moved fast.
The RTX 4090, with its transformer-focused design, is in a completely different league, turning what might be a theoretical 10× FLOPS advantage into real-world 5–10× faster training in many cases (Four generations of Nvidia GPUs compared | Computer Vision Lab) (LLM Inference - Consumer GPU performance | Puget Systems). In summary, any AI model that can run on a 1080 Ti will run significantly faster on an RTX 4090, and models that are barely attainable on the 1080 Ti (due to size or compute needs) become practical on the 4090. The architectural leaps from Pascal to Ada – Tensor Cores, massive core counts, fast memory, huge caches, and improved instruction sets – all contribute to making the RTX 4090 a vastly superior GPU for modern AI training and inference.
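Several of the gaps above come down to compute capability (6.1 for the 1080 Ti, 8.9 for the 4090), so a small lookup table is a handy way to keep track of them. The sketch below encodes the minimum compute capability for a few features discussed in this report. The feature names are hypothetical labels, and the thresholds are assumptions based on NVIDIA's documentation, so double-check them against the compute capability tables in the CUDA C++ Programming Guide before relying on them.

```python
# Minimum compute capability (major, minor) for selected features.
# Thresholds are assumptions based on NVIDIA documentation; verify before use.
FEATURE_MIN_CC = {
    "dp4a_int8_dot_product": (6, 1),          # __dp4a intrinsic
    "tensor_cores_fp16": (7, 0),              # Volta and later
    "independent_thread_scheduling": (7, 0),  # Volta and later
    "tensor_cores_bf16_tf32": (8, 0),         # Ampere and later
    "sparse_tensor_cores_2_4": (8, 0),        # 2:4 structured sparsity
    "tensor_cores_fp8": (8, 9),               # Ada, Hopper and later
}

GTX_1080_TI = (6, 1)  # Pascal, GP102
RTX_4090 = (8, 9)     # Ada Lovelace, AD102


def supported_features(cc):
    """Features whose minimum compute capability is <= cc (tuples compare lexicographically)."""
    return sorted(name for name, min_cc in FEATURE_MIN_CC.items() if cc >= min_cc)


def missing_features(cc, reference):
    """Features available at `reference` compute capability but not at `cc`."""
    return sorted(set(supported_features(reference)) - set(supported_features(cc)))


if __name__ == "__main__":
    print("1080 Ti supports:", supported_features(GTX_1080_TI))
    print("1080 Ti lacks vs 4090:", missing_features(GTX_1080_TI, RTX_4090))
```

If PyTorch is installed, `torch.cuda.get_device_capability()` returns the current GPU's (major, minor) tuple, which can be passed straight to `supported_features`.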

Sources: NVIDIA product specifications (NVIDIA GeForce GTX 1080 Ti Specs | TechPowerUp GPU Database) (NVIDIA GeForce RTX 4090 Specs | TechPowerUp GPU Database), NVIDIA architecture whitepapers (1. Pascal Tuning Guide — Pascal Tuning Guide 12.8 documentation) (Ada Lovelace (microarchitecture) - Wikipedia), and independent benchmarks (Lambda Labs, Puget Systems, Tim Dettmers, etc.) (LLM Inference - Consumer GPU performance | Puget Systems) (Four generations of Nvidia GPUs compared | Computer Vision Lab) detailing deep learning performance across GPU generations. All claims and numerical comparisons in this report are backed by these sources, providing a comprehensive technical contrast between the GTX 1080 Ti and RTX 4090 for transformer model workloads and beyond.